To assess method performance, we acquired 150 photobombed images and developed corresponding logical masks to highlight unwanted elements. Custom edited images, used as ground truth, were then created for each photobombed image using tools like Adobe Photoshop, Microsoft Paint, and Cleanup Pictures. Image collection involved manual sourcing from online platforms such as Facebook , Getty Images, Bored Panda, Adobe Stock, Shutterstock, and Pinterest. These images were annotated using MATLAB's "Region of Interest" function to craft logical masks, employing a loop for automation.

The "FreeHand" function aided in marking regions iteratively until binary masks were obtained. Annotation time averaged about 50 - 60 seconds per image, varying with object count. The resulting photobombed images and masks were subsequently employed to test different inpainting methods for removing undesirable areas.

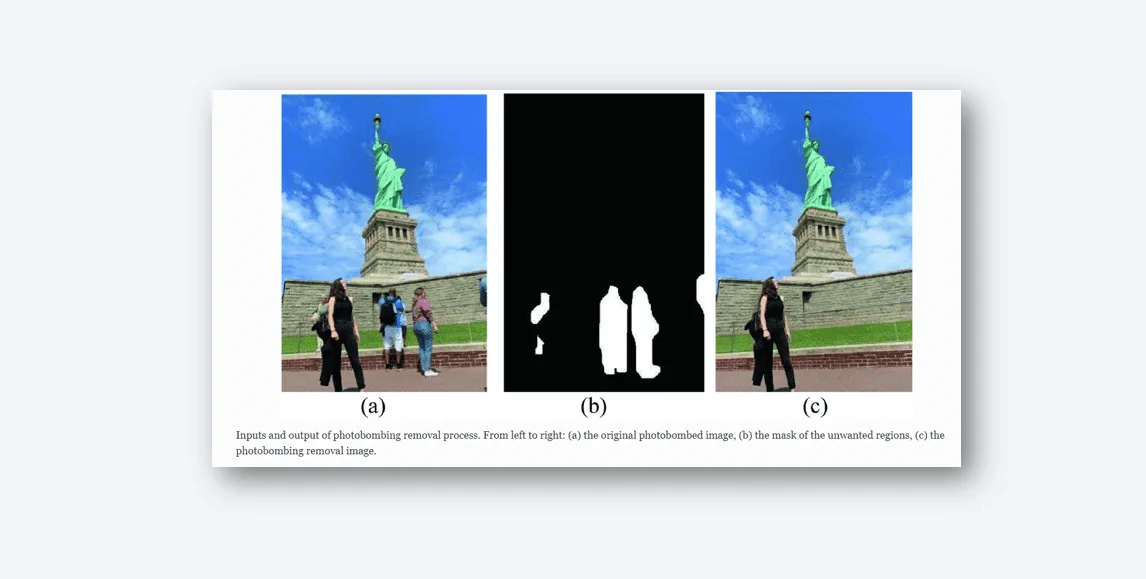

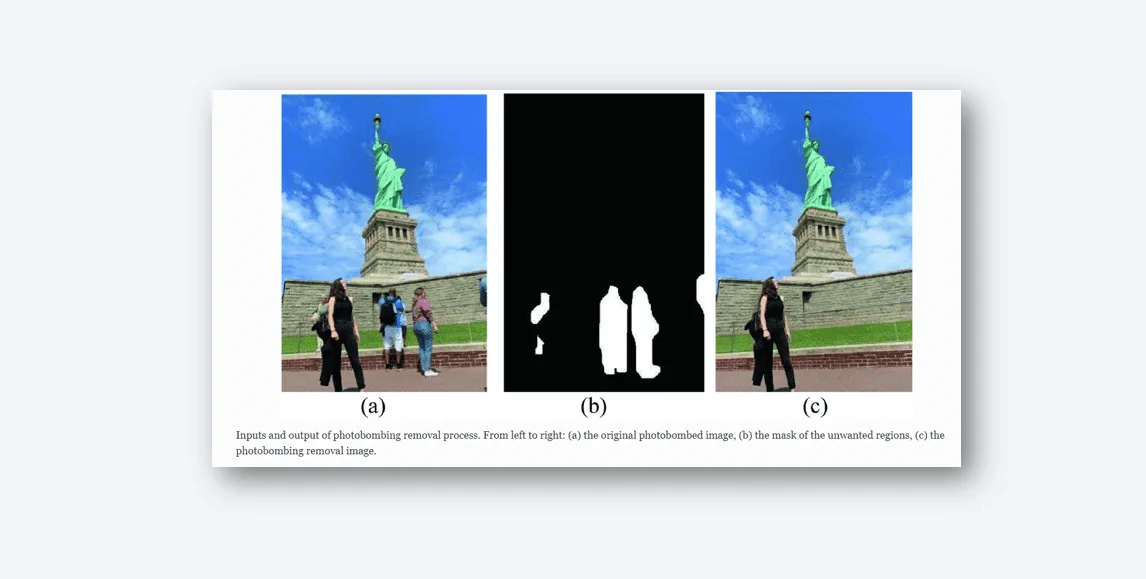

The image that has been photobombed, along with its corresponding mask, is utilized as input for different inpainting techniques. These methods aim to reconstruct the image. Various methods including Exemplar-Based Image Inpainting (EBII) , Coherence Transport (CT), Fast Marching (FM), Fluid Dynamics (FD), Gated Convolution (GC), and Resolution-robust Large Mask Inpainting with Fourier Convolutions (LaMa) are employed to identify the most effective approach. This process involves feeding the photobombed image and its mask into these methods, resulting in the removal of unwanted elements and restoration of the original image. EBII and CT are executed using Matlab, while FM and FD are implemented using OpenCV. The GC method utilizes a pre-trained model from Github, and LaMa's available author's code is also employed. Subsequently, these techniques are applied to the testing set, generating images devoid of photobombing. These images are then compared to the ground truth using various metrics, elaborated upon in the subsequent section.

To evaluate the outcomes of all techniques, the manipulated images produced through photobombing removal are juxtaposed against meticulously adjusted reference images. Numerous assessment criteria are employed to gauge the efficacy of the photobombing removal results. Specifically, the evaluation involves metrics such as the Fréchet inception distance (FID) , Structural Similarity Index (SSIM) , and Peak-to-Noise Signal Ratio (PSNR) , which aid in comparing the reconstructed images with the custom-edited reference images. Additionally, we introduce the Texture-based Similarity Index (TSI) using the concept of Local Binary Patterns (LBP) [22].

The evaluation of various inpainting techniques on our dataset focuses on assessing their ability to remove photobombed regions while maintaining overall image quality. Key metrics including FID, SSIM, and PSNR are utilized to gauge different methods against the ground truth. A novel metric, Texture-based Similarity Index (TSI), is introduced. Performance analysis, reveals LaMa as the most effective, boasting the best scores for FID, SSIM, and PSNR, along with the highest average rank based on TSI. LaMa's success is attributed to its efficient receptive field utilizing fast Fourier convolutions (FFCs) and a dedicated loss function. In contrast, GC lags due to its inability to effectively reconstruct masked regions with trivial pixel details. LaMa's superiority is further evident in the graphical representation of results.

The efficacy of inpainting methods concerning FID scores relative to the percentage of the region to be inpainted. Notably, all methods perform well in the 0-10% range, with LaMa consistently outperforming others across all percentages. Conversely, the performance of EBII drops in certain ranges, while FM maintains average performance. A direct relationship between FID score and inpainting region percentage is observed, highlighting LaMa's consistent prowess.

Getting rid of photobombs, you know, those annoying and distracting things that sneak into our pictures, is a bit of a puzzle. But it's super important because we all want our pictures to look great without any surprise guests, right? So, here's what we did: we started with pictures that had these sneaky intruders. Then, we made outlines of the stuff we wanted to kick out. After that, we played matchmaker by pairing up these pictures and their outlines and handed them over to some smart computer models that know how to fill in the missing parts. We then used fancy measuring tools to see how well these models did, comparing them to the real deal. And guess what? Our experiments showed that using these clever models really helps to zap those photobombs. We're pretty excited about this, and we're pretty sure this is just the beginning.

In the future, we're planning to make our toolkit even bigger and better for even cooler results. So, stay tuned! 📸🚫🙅♂️

This research was supported by the National Science Foundation (NSF) under Grant 2025234.

Sai Pavan Kumar Prakya, Madamanchi Manju Venkata Sainath, Vatsa S. Patel, Samah Saeed Baraheem & Tam V. Nguyen.

Department of Computer Science, University of Dayton, Dayton, OH, 45469, USA